An example from New Zealand shows how explosive such errors can be. When identifying individuals at border controls, the focus clearly needs to be on the passport photos. However, an AI-based passport photo checker rejected photos of people of Asian descent because it interpreted their eyes as closed. Systematic errors in predictive models can thus not only lead to incorrect results but also be unintentionally discriminatory. Here we present a method for avoiding, detecting and correcting such biases that is already being used successfully in many projects.

Causes of bias

Systematic errors in predictive models are mainly due to poor data quality or incorrect use of data.

Lack of data quality

Non-representative samples and discriminatory data

The training data for an AI algorithm must be a representative sample; otherwise, subgroups with certain characteristics may be represented more frequently than they are in the population as a whole. For example, data collected online comes much more frequently from young people than from seniors, a distribution that does not correspond to the actual proportions in the overall population. The result is a disproportionate weighting of the characteristics of young people, which risks distorting the results for other age groups.

The same is true if the training data reflects discrimination against certain groups. For example, if minorities were disadvantaged in past lending decisions, the legacy lending data set is biased to the disadvantage of those minorities. Even when the discriminatory behavior has long since ended, an AI model trained on this data will, without countermeasures, adopt and perpetuate the discriminatory patterns preserved in it. Moreover, it is irrelevant whether these groups were disadvantaged intentionally or unintentionally. In either case, this bias will negatively affect the predictive performance of an AI model.
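
Whether a legacy data set carries such a pattern can be checked before training, for example by comparing historical approval rates across groups. The following is a minimal sketch, assuming a hypothetical list of (group, approved) records; the group names and counts are illustrative, not real data. The ratio computed here is often called the disparate impact ratio, and a commonly cited rule of thumb (the "four-fifths rule") treats values below 0.8 as a warning sign.

```python
from collections import defaultdict


def approval_rates(records):
    """Share of approved applications per group in (group, approved) records."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates, protected, reference):
    """Approval rate of the protected group relative to the reference group."""
    return rates[protected] / rates[reference]


# Hypothetical legacy lending decisions (illustrative counts only).
records = (
    [("majority", True)] * 60 + [("majority", False)] * 40
    + [("minority", True)] * 30 + [("minority", False)] * 70
)

rates = approval_rates(records)
ratio = disparate_impact_ratio(rates, protected="minority", reference="majority")
print(rates)                  # {'majority': 0.6, 'minority': 0.3}
print(f"ratio: {ratio:.2f}")  # ratio: 0.50
```

A low ratio is a signal for closer review rather than proof of discrimination, since differences in approval rates can also have legitimate explanations.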

Incorrect data usage

Incorrect handling of otherwise error-free data can also lead to bias. The four main causes are:

Integrated solution approach

Given the multiple causes, a process to effectively prevent bias must consist of several components. This is the only way to ensure that all error sources are taken into account. For this purpose, we recommend an integrated three-part approach that has proven its worth many times:

1. Avoid bias

Prevention is better than cure. Training and awareness-raising for the staff involved in development and operations are therefore effective levers for avoiding bias in the first place. Training concepts that convey specialized methodological, ethical and organizational content are also useful. This knowledge should be imparted not only to the company's own employees: suppliers should also be screened for their knowledge of how to prevent bias.

Creating standards also counteracts bias; this includes standardizing all development steps and monitoring compliance with them. Comprehensive versioning, documentation, access controls and logging of all AI input variables (features), AI model code and AI results, as well as strict data governance, are all important. Together, these measures have proven effective in avoiding bias caused by methodological errors, ignorance or the intentional introduction of errors.

Finally, logging the assumptions and intended use of the final AI model prevents incorrect use of the AI results.
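
The documentation measures above can be made concrete by storing a structured record next to each model version. The sketch below assumes a hypothetical `ModelRecord` data structure; its field names are illustrative and not taken from any particular tool or standard. It logs the features used, the features deliberately excluded, the assumptions and the intended use, and it rejects a record that lists a feature as both used and excluded.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical documentation record stored alongside each model version."""

    model_name: str
    version: str
    features: tuple[str, ...]           # input variables used for training
    excluded_features: tuple[str, ...]  # variables deliberately left out, e.g. for bias reasons
    assumptions: tuple[str, ...]        # conditions under which the results are valid
    intended_use: str
    out_of_scope_uses: tuple[str, ...] = ()

    def __post_init__(self):
        # Guard against a feature being documented as both used and excluded.
        overlap = set(self.features) & set(self.excluded_features)
        if overlap:
            raise ValueError(f"features both used and excluded: {sorted(overlap)}")

    def to_json(self) -> str:
        """Serialize the record, e.g. for storage next to the versioned model artifact."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    def allows(self, use_case: str) -> bool:
        """False if the use case has been explicitly ruled out."""
        return use_case not in self.out_of_scope_uses


# Hypothetical example values.
record = ModelRecord(
    model_name="credit_scoring",
    version="1.4.0",
    features=("income", "loan_amount", "employment_years"),
    excluded_features=("gender", "religion", "first_name"),
    assumptions=("Applicants are adults applying for consumer loans",),
    intended_use="Pre-screening of consumer loan applications",
    out_of_scope_uses=("mortgage pricing",),
)
print(record.to_json())
```

Keeping such a record in the same versioned repository as the model code makes it possible to check later whether a model is being used outside the assumptions it was built for.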

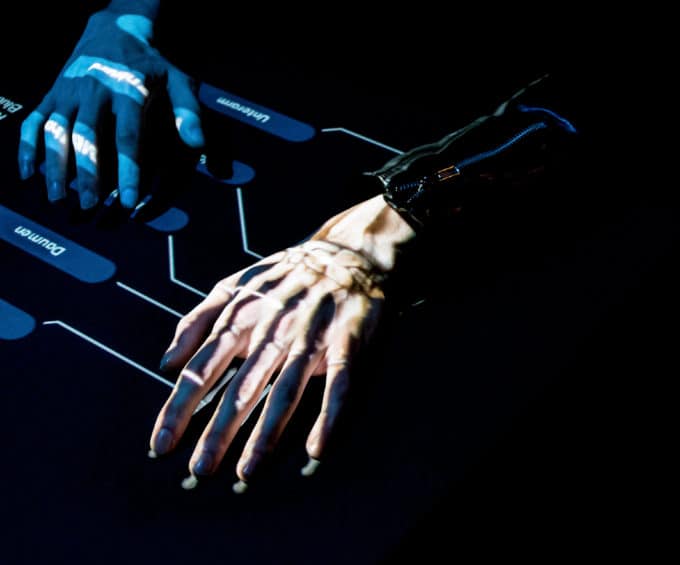

2. Detect bias

Experts can detect systematic errors in an AI model by evaluating its input variables and results. To do this, they methodically compare the distributions expected in a bias-free population with the distributions of the model's input variables. Deviations point to errors in data collection or processing.
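
A simple version of this comparison can be sketched in Python. The sketch assumes hypothetical age-group counts from a training sample and hypothetical expected population shares; in practice, the expected shares would come from a reliable reference such as official statistics. It computes Pearson's chi-square statistic and flags categories whose share deviates by more than a chosen tolerance (here an assumed 5 percentage points).

```python
def compare_distribution(observed_counts, expected_shares, tolerance=0.05):
    """Compare observed category counts with shares expected in a bias-free population.

    Returns Pearson's chi-square statistic and the categories whose observed share
    deviates from the expected share by more than `tolerance` (absolute difference).
    Expected shares must be positive and sum to 1.
    """
    total = sum(observed_counts.values())
    chi_square = 0.0
    flagged = {}
    for category, expected_share in expected_shares.items():
        observed = observed_counts.get(category, 0)
        expected = expected_share * total
        chi_square += (observed - expected) ** 2 / expected
        observed_share = observed / total
        if abs(observed_share - expected_share) > tolerance:
            flagged[category] = (observed_share, expected_share)
    return chi_square, flagged


# Hypothetical training sample vs. hypothetical expected population shares.
observed = {"18-39": 520, "40-64": 390, "65+": 90}
expected = {"18-39": 0.35, "40-64": 0.40, "65+": 0.25}

chi_square, flagged = compare_distribution(observed, expected)
print(f"chi-square: {chi_square:.1f}")  # chi-square: 185.2
print(flagged)  # {'18-39': (0.52, 0.35), '65+': (0.09, 0.25)}
```

With three categories (two degrees of freedom), a chi-square value above about 5.99 indicates a deviation that is significant at the 5 percent level. Because even small deviations become significant in large samples, the share-based tolerance is reported as well.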

Another key requirement for any plausibility check is transparency: the influence of individual variables on the overall model result must be comprehensible. Algorithms differ significantly in this respect. Simpler models such as logistic regression are very transparent, because each variable's influence can be read directly from its coefficient. For more complex models, indirect methods such as SHAP (SHapley Additive exPlanations) are used to quantify the contribution of individual features. Fairness measures can also be used to check whether the quality of model outcomes is similar across groups.
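
A basic fairness measure of this kind compares quality metrics across groups. The sketch below assumes binary labels (1 = positive outcome) and a hypothetical group attribute per record; it computes accuracy and the true positive rate for each group and reports the largest gap between groups. A gap in true positive rates corresponds to what is often called the equal opportunity criterion; open-source libraries such as Fairlearn offer more complete tooling for this kind of disaggregated evaluation.

```python
import math
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Accuracy and true positive rate (TPR) per group for binary labels (1 = positive)."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "positives": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        if truth == 1:
            c["positives"] += 1
            c["true_pos"] += int(pred == 1)
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            # TPR is undefined for a group without positive examples.
            "tpr": c["true_pos"] / c["positives"] if c["positives"] else math.nan,
        }
        for group, c in counts.items()
    }


def max_gap(metrics, key):
    """Largest difference in a metric between any two groups (undefined values ignored)."""
    values = [m[key] for m in metrics.values() if not math.isnan(m[key])]
    return max(values) - min(values)


# Hypothetical labels, predictions and group membership.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(y_true, y_pred, groups)
print(metrics)
print("accuracy gap:", max_gap(metrics, "accuracy"))  # accuracy gap: 0.5
print("TPR gap:", max_gap(metrics, "tpr"))            # TPR gap: 0.5
```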

3. Correct bias

If bias is caused by poor data quality, it can be corrected by weighting the data set, by stratified random sampling or by other resampling strategies. The goal is always to reproduce the distribution of the variables that bias-free data would show.

Consider a data set in which the male-female ratio is expected to be 50/50 but which contains 33 percent males and 67 percent females. Giving each male record double weight approximately restores the expected ratio (2 × 33 = 66 versus 67). Potentially bias-causing input variables such as migration background or religion are best excluded right at the start of development.
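
The weighting in this example can be computed directly: each category's weight is its target share divided by its observed share. The sketch below assumes a hypothetical list with one gender value per record. It also includes a simple stratified downsampling helper as an alternative that draws a subsample with the target proportions instead of reweighting; the function names and the seed are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict


def reweighting_factors(values, target_shares):
    """Weight per category so that weighted shares match target_shares.

    weight = target share / observed share (every category must occur at least once).
    """
    counts = Counter(values)
    total = len(values)
    return {cat: share / (counts[cat] / total) for cat, share in target_shares.items()}


def stratified_downsample(records, key, target_shares, seed=0):
    """Draw a subsample without replacement whose strata match target_shares.

    The subsample is the largest one that every stratum can supply.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)
    n_total = min(len(strata[cat]) / share for cat, share in target_shares.items())
    sample = []
    for cat, share in target_shares.items():
        sample.extend(rng.sample(strata[cat], int(share * n_total)))
    return sample


# Hypothetical data set: 33 percent male, 67 percent female records.
genders = ["male"] * 33 + ["female"] * 67
target = {"male": 0.5, "female": 0.5}

weights = reweighting_factors(genders, target)
print(weights)  # male ≈ 1.52, female ≈ 0.75
print(round(weights["male"] / weights["female"], 2))  # 2.03

subsample = stratified_downsample(genders, key=lambda g: g, target_shares=target)
print(Counter(subsample))  # Counter({'male': 33, 'female': 33})
```

The male weight comes out at roughly twice the female weight, matching the doubling described above. Reweighting keeps all records but requires a learning algorithm that accepts sample weights; downsampling works with any algorithm but discards data.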

On the other hand, such variables may be exactly what reveals that minorities are overrepresented. If the AI model is distorted in this respect, explicitly including such a variable may even help prevent bias.

It is also important to ensure that features excluded because of bias do not re-enter the model through the back door. To stay with the male-female example, it makes little sense to delete the gender variable but then train the algorithm on first names, which largely encode gender.
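
One way to look for such back doors is to test how well a remaining feature predicts the excluded attribute. The following sketch assumes hypothetical first-name and gender values; it predicts gender by majority vote within each first name and compares that accuracy with always guessing the most frequent gender. It is a simple illustration, not a complete proxy analysis: for features with many rare values, such as names, the in-sample accuracy is optimistic, so a real check should be evaluated on held-out data.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Return (proxy accuracy, baseline accuracy) for predicting a protected attribute.

    Proxy accuracy: predict the most frequent protected value within each feature value.
    Baseline accuracy: always predict the overall most frequent protected value.
    A large gap suggests that the feature acts as a proxy for the protected attribute.
    """
    by_value = defaultdict(Counter)
    for feature, protected in zip(feature_values, protected_values):
        by_value[feature][protected] += 1
    n = len(protected_values)
    proxy_correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    baseline_correct = Counter(protected_values).most_common(1)[0][1]
    return proxy_correct / n, baseline_correct / n


# Hypothetical records after the gender column has been dropped.
first_names = ["Anna", "Anna", "Maria", "Peter", "Peter", "Jonas", "Kim", "Kim"]
gender = ["f", "f", "f", "m", "m", "m", "f", "m"]

proxy_acc, baseline_acc = proxy_strength(first_names, gender)
print(proxy_acc, baseline_acc)  # 0.875 0.5
```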

If subjective judgments made during manual data preparation cause a bias problem, multiple independent assessments of the same data and additional test questions with known answers can remedy the situation.
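
A minimal sketch of how the two remedies can be combined: annotators are first scored on test questions with known answers, and only those who pass contribute to a majority vote on the remaining items. The data layout, the annotator and item names, and the 0.8 pass threshold are hypothetical assumptions for illustration.

```python
from collections import Counter, defaultdict


def annotator_accuracy(labels, gold):
    """Share of test questions (items with known answers) each annotator got right.

    labels: {annotator: {item_id: label}}, gold: {item_id: correct_label}
    """
    accuracy = {}
    for annotator, answers in labels.items():
        answered = [item for item in gold if item in answers]
        if answered:
            accuracy[annotator] = sum(answers[i] == gold[i] for i in answered) / len(answered)
    return accuracy


def aggregate_labels(labels, gold, min_accuracy=0.8):
    """Majority vote per item, counting only annotators who pass the test questions.

    Ties are resolved in favour of the label seen first (Counter.most_common order).
    """
    accuracy = annotator_accuracy(labels, gold)
    trusted = [a for a, score in accuracy.items() if score >= min_accuracy]
    votes = defaultdict(Counter)
    for annotator in trusted:
        for item, label in labels[annotator].items():
            if item not in gold:
                votes[item][label] += 1
    return {item: counter.most_common(1)[0][0] for item, counter in votes.items()}


# Hypothetical annotations: g1 and g2 are test questions with known answers.
gold = {"g1": "yes", "g2": "no"}
labels = {
    "a1": {"g1": "yes", "g2": "no", "x1": "no", "x2": "yes"},
    "a2": {"g1": "yes", "g2": "no", "x1": "no", "x2": "no"},
    "a3": {"g1": "no", "g2": "yes", "x1": "yes", "x2": "yes"},  # fails the test questions
    "a4": {"g1": "yes", "g2": "no", "x1": "yes", "x2": "yes"},
}
print(annotator_accuracy(labels, gold))  # {'a1': 1.0, 'a2': 1.0, 'a3': 0.0, 'a4': 1.0}
print(aggregate_labels(labels, gold))    # {'x1': 'no', 'x2': 'yes'}
```

Without the test-question filter, the unreliable annotator a3 would turn item x1 into a tie.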

Conclusion

Bias is an intrinsic problem of all human work, since no human being is perfectly objective. AI has the advantage of being objective, provided it has been trained appropriately and receives only bias-free data. To achieve this, developers and users must always be aware of the causes of systematic errors. With the integrated approach presented here, they can minimize bias and obtain realistic results from their AI models.

About the Authors

Jakob Gliwa

Associate Director, Berlin, Germany

Jakob is an experienced IT, artificial intelligence and insurance expert. He has led several data-driven transformations focused on IT modernization, organization and process automation. Jakob leads BCG Platinion's Smart Automation chapter and is a member of the Insurance practice leadership group.

Dr. Kevin Ortbach

BCG Project Leader, Cologne, Germany

Kevin is an expert in large-scale digital transformations. He has a strong track record in successfully managing complex IT programs, including next-generation IT strategy and architecture definitions, global ERP transformations, IT carve-outs and PMIs, as well as AI-at-scale initiatives. During his time with BCG Platinion, he was an integral part of the Consumer Goods leadership group and led the Advanced Analytics working group within the Architecture Chapter.

Björn Burchert

Principal IT Architect, Hamburg, Germany

Björn is an expert in data analytics and modern IT architecture. With a background as a data scientist, he supports clients across industries in building data platforms and starting their ML journey.

Oliver Schwager

Managing Director, Munich, Germany

Oliver is a Managing Director at BCG Platinion. He supports clients around the globe when senior advice on ramping up and managing complex digital transformation initiatives is key to success. Drawing on his extensive experience, he supports clients in their critical IT initiatives, ranging from designing and migrating to next-generation architectures to transforming IT organizations so that they can manage IT programs at scale with agile ways of working. He is part of the Industrial Goods leadership group and has a passion for automotive and aviation.