Bank customers increasingly operate digitally, whether they are transferring money, trading securities, or taking out loans. As a consequence, they expect financial institutions to provide the same services they enjoy when shopping online: 24/7 availability, personalized interaction, and relevant offers. Credit institutions are also feeling the heat from a growing number of new payment services (such as Apple Pay, Alipay, and PayPal) and from neobanks.

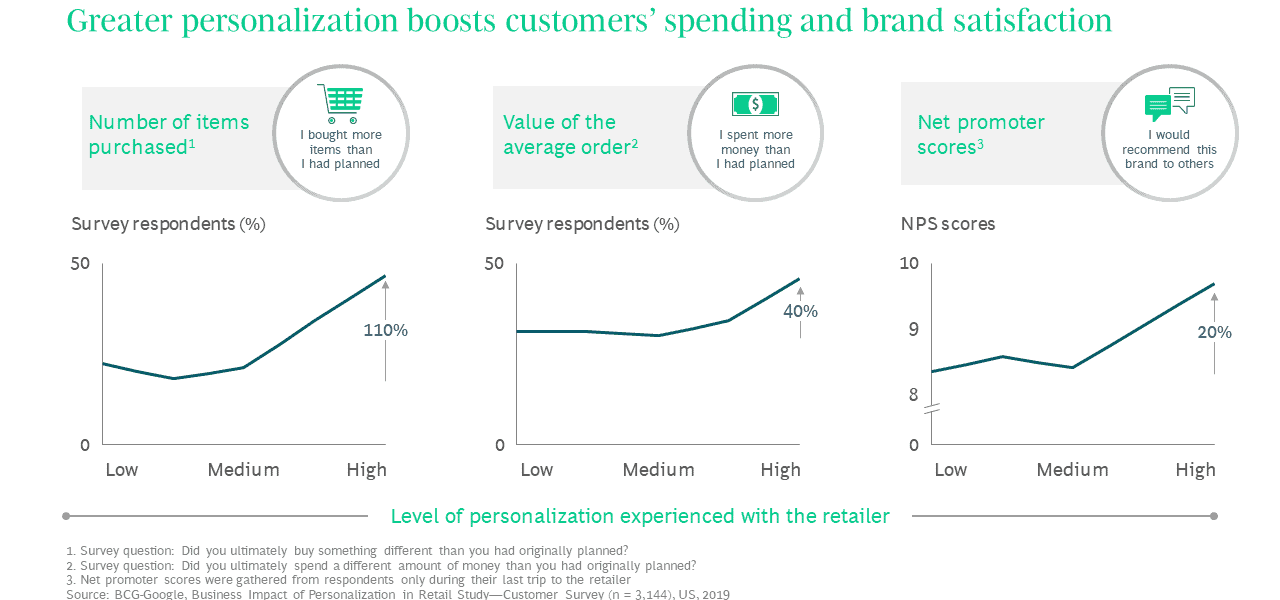

To remain competitive, banks must do more than just offer new services and products on digital channels; they must also adapt their sales and marketing activities to these channels. According to a study by BCG and Google, personalized content and relevant offers tailored to each customer's individual needs significantly increase eCommerce sales. The study found that, with personalized content, consumers are 110 percent more likely to add more items to their shopping baskets.

Universal banks are very well positioned to meet this challenge because they possess the strongest currency of the digital age: data. Banks that use their data effectively as input for artificial intelligence (AI) applications gain new, value-added access to their customers.

In addition to offering customers the right product through the channels they use, banks must act at exactly the right time, that is, when customers are receptive to an offer. Timing is particularly crucial for conversion, and real-time data processing makes it possible to seize that moment.

But what does “real time” mean? There is no clear-cut answer, as the perspective varies with each business case. As a result, business and IT often define the term differently, which leads to misunderstandings. Technically speaking, “real-time data processing” means that data is processed and displayed within up to 20 milliseconds of being received. Because the term is used flexibly, in other business cases real time may mean that processing, including analysis, is completed within milliseconds or even minutes of the data being received. The specific time constraints on the processing window therefore have a significant impact on the solution and its implementation costs.

“Real time often has different meanings for business and IT, leading to misunderstandings about the effort required to develop business use cases.”

Financial data contains valuable information about customer behavior

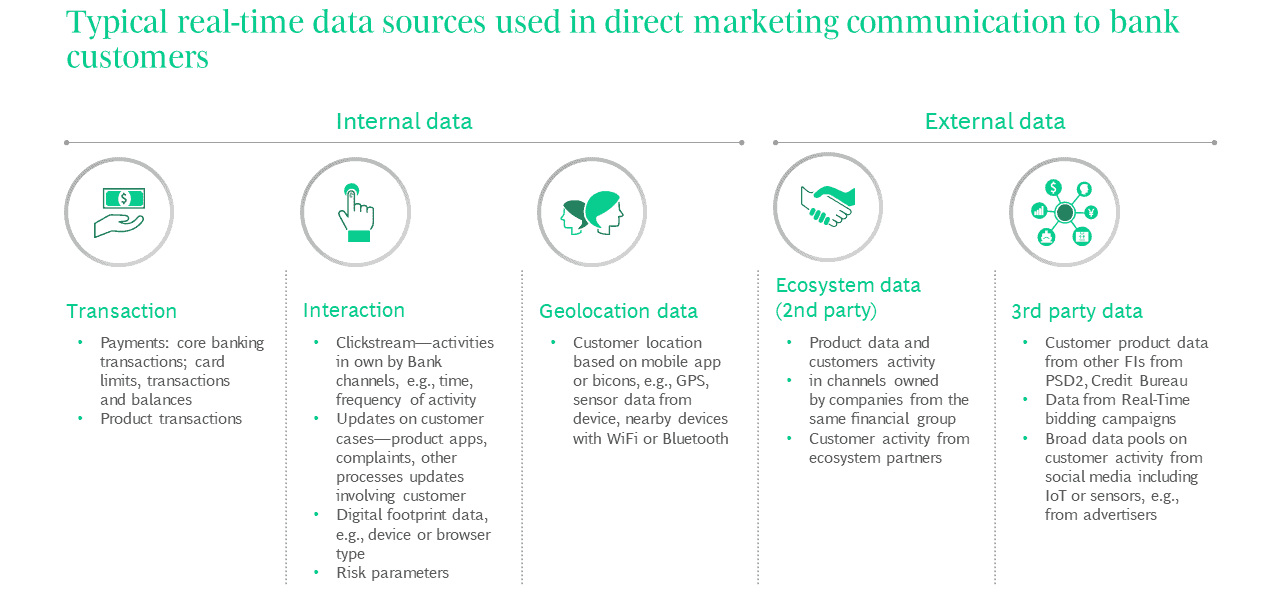

Banks have numerous data sources that can be utilized for direct marketing communications. Every transaction or channel interaction linked to geolocation carries valuable data concerning customer behavior and preferences. More and more financial institutions are leveraging data from external sources to increase customer insight, including data from partner ecosystems, real-time bidding campaigns or social media.

The data is available, but it creates value only if it is processed in a targeted manner. Only then can it feed decision-making processes, for example whether or not to make a customer a particular offer.

Practical solutions for key challenges in real-time data processing

Based on our project experience and consulting practice, we have developed practical solutions for typical problems in real-time data processing.

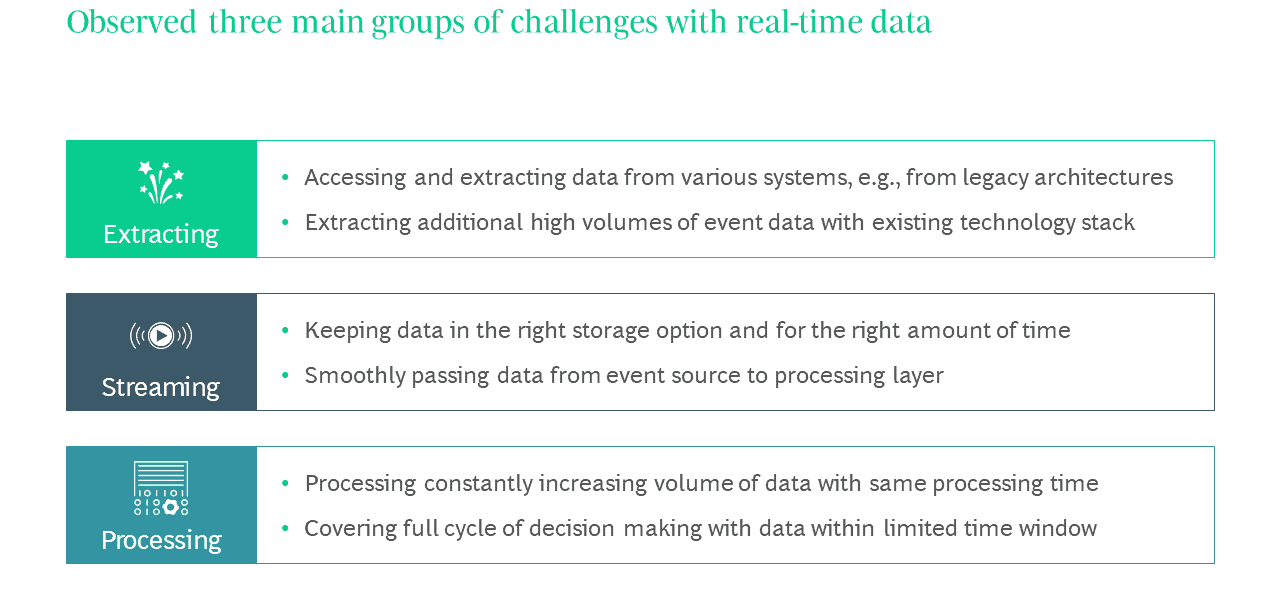

1. Extracting

Key challenges: In many cases, the closed architectures of core banking systems and product systems limit the ability to access and modify their source code. In addition, extracting additional large volumes of data places a significant burden on source systems. This can result in service level agreements not being met and increased maintenance costs for source systems.

Best practices: Credit institutions should therefore avoid collecting real-time data directly from product systems wherever possible. Instead, data should be collected and processed using additional tools or platforms that offload the core banking systems. Data should also be captured incrementally using Change Data Capture (CDC), which has little or no impact on the performance of the database or applications. CDC captures only changed data and thus limits the volume to be processed. Several CDC approaches exist (for example log-based, trigger-based, or timestamp-based), each with different performance characteristics. Full database dumps should be avoided.
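To illustrate why incremental capture keeps the load on source systems low, here is a minimal Python sketch of the consumer side of log-based CDC. It assumes a change log whose entries carry a strictly increasing log sequence number (LSN); `ChangeEvent` and `CdcConsumer` are hypothetical names that do not correspond to any specific CDC product. In practice, a CDC tool reads these entries from the database's transaction log; here they are simply a Python list.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One entry of a hypothetical database change log."""
    lsn: int                               # log sequence number, strictly increasing
    op: str                                # "insert", "update" or "delete"
    key: str                               # primary key of the changed row
    row: Optional[Dict[str, Any]] = None   # new row image (None for deletes)


@dataclass
class CdcConsumer:
    """Applies only changes newer than the last processed LSN to a downstream copy."""
    last_lsn: int = 0
    replica: Dict[str, Dict[str, Any]] = field(default_factory=dict)

    def poll(self, change_log: List[ChangeEvent]) -> int:
        """Process events after last_lsn and return how many were applied."""
        applied = 0
        for event in change_log:
            if event.lsn <= self.last_lsn:
                continue  # already processed in an earlier poll
            if event.op == "delete":
                self.replica.pop(event.key, None)
            else:  # insert or update: upsert the new row image
                self.replica[event.key] = dict(event.row or {})
            self.last_lsn = event.lsn  # remember progress after each event
            applied += 1
        return applied


# Usage: two polls over a growing change log.
log = [
    ChangeEvent(1, "insert", "acc-1", {"balance": 100}),
    ChangeEvent(2, "insert", "acc-2", {"balance": 50}),
]
consumer = CdcConsumer()
first = consumer.poll(log)    # applies LSN 1 and 2
log.append(ChangeEvent(3, "update", "acc-1", {"balance": 80}))
log.append(ChangeEvent(4, "delete", "acc-2"))
second = consumer.poll(log)   # applies only LSN 3 and 4
```

Because the consumer remembers the last LSN it processed, each poll touches only the new changes instead of re-reading entire tables, as a dump would.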

2. Streaming

Key challenges: The constant flow of event data raises the question of which storage option to choose. The first issue is whether persistent data should be retained, and for how long after the data format changes. The second concerns changes to data models within continuous event flows: these cause disruptive data drift that breaks pipelines, which in turn slows down analytics and increases costs.

Best practices: To avoid processing delays, we recommend designing a cost-effective, scalable event-based architecture with the storage option best suited to streaming. Predefined rules help handle data deviations automatically. These rules should address every type of change so that corrections can be made easily.
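Predefined rules for data deviations could look roughly like the following Python sketch. It assumes a hypothetical card-transaction event with a fixed expected schema and three illustrative rules: fill a missing optional field with a default, coerce a changed field type where possible, and keep new unknown fields while flagging them for review. Events the rules cannot repair are routed to a dead-letter path instead of breaking the pipeline. `EXPECTED_SCHEMA`, `DEFAULTS`, and `apply_drift_rules` are illustrative names, not part of any particular streaming framework.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical expected schema for a card-transaction event: field -> type.
EXPECTED_SCHEMA: Dict[str, type] = {
    "customer_id": str,
    "amount": float,
    "currency": str,
}

# Hypothetical defaults for fields that may disappear from the stream.
DEFAULTS: Dict[str, Any] = {"currency": "EUR"}


def apply_drift_rules(event: Dict[str, Any]) -> Tuple[str, Dict[str, Any], List[str]]:
    """Normalize one event against EXPECTED_SCHEMA using predefined rules.

    Returns (status, event, notes); status is "ok", "fixed" or "dead_letter".
    """
    fixed: Dict[str, Any] = {}
    notes: List[str] = []
    for name, expected_type in EXPECTED_SCHEMA.items():
        if name not in event:
            if name in DEFAULTS:  # rule 1: missing field with a known default
                fixed[name] = DEFAULTS[name]
                notes.append(f"defaulted {name}")
                continue
            return "dead_letter", event, [f"missing required field {name}"]
        value = event[name]
        if not isinstance(value, expected_type):
            try:  # rule 2: coerce a changed type, e.g. "12.5" -> 12.5
                value = expected_type(value)
                notes.append(f"coerced {name}")
            except (TypeError, ValueError):
                return "dead_letter", event, [f"cannot coerce {name}"]
        fixed[name] = value
    extra = sorted(set(event) - set(EXPECTED_SCHEMA))
    if extra:  # rule 3: keep new, unknown fields but flag them for schema review
        fixed["_extra"] = {k: event[k] for k in extra}
        notes.append(f"new fields {extra}")
    return ("fixed" if notes else "ok"), fixed, notes


# Usage: a drifted event with a string amount and no currency field.
status, normalized, notes = apply_drift_rules({"customer_id": "c1", "amount": "12.5"})
```

Routing irreparable events aside, rather than failing the whole stream, keeps the pipeline running while the schema change is handled.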

BCG Platinion has analyzed various open-source storage options for streaming data, both for pure streaming and for combined stream and batch processing. The framework for selecting the best storage option should consider not only the total cost of ownership (TCO) but also the architecture’s agility in terms of extensibility, ease of scaling, and data analytics, for example the availability of an SQL interface for querying data.
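A selection framework of this kind can be expressed as a weighted scoring model. The Python sketch below scores candidates on the criteria named above (TCO, extensibility, scalability, and analytics support such as an SQL interface). The weights, the 1-to-5 scale, and the two candidates are hypothetical placeholders, not results of the BCG Platinion analysis.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for the criteria named in the text (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "tco": 0.4,            # total cost of ownership: a higher score means cheaper
    "extensibility": 0.2,
    "scalability": 0.2,
    "analytics": 0.2,      # e.g. availability of an SQL interface
}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores on a 1-5 scale into one weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank_options(options: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (option, score) pairs sorted from best to worst."""
    ranked = [(name, round(weighted_score(s), 2)) for name, s in options.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Placeholder scores for two hypothetical options, not real evaluation results.
candidates = {
    "option_a": {"tco": 4, "extensibility": 3, "scalability": 5, "analytics": 2},
    "option_b": {"tco": 3, "extensibility": 4, "scalability": 4, "analytics": 5},
}
ranking = rank_options(candidates)   # option_b (3.8) ranks ahead of option_a (3.6)
```

Keeping the weights explicit makes the trade-off between cost and agility visible and easy to revisit when priorities change.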

3. Processing

Key challenges: Rapid technological development has opened up access to huge numbers of data sources, events, and signals. As a result, current IT architectures must quickly process ever-increasing volumes of data, such as geolocation information. Another challenge is the very limited time window for the entire processing chain, from extraction and analysis to decision-making.

Best practices: Implementing a Complex Event Processing (CEP) component can address these problems. A CEP component can process large amounts of data and, once it has identified a relevant business event, notify the Real-Time Marketing (RTM) engine to trigger an appropriate customer-facing action. Asynchronous responses and aggressive response timeouts help ensure that decisions are made on time, even when individual components respond slowly.
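To make the CEP idea more concrete, here is a minimal Python sketch of a single pattern rule over a sliding time window. It assumes a hypothetical business event, "customer is travelling", defined as a set number of card payments abroad within a given time window, and it assumes events arrive in timestamp order. The `notify` callback stands in for the call to the RTM engine. Production CEP engines offer much richer pattern languages; this only shows the underlying technique.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Callable, Deque, Dict, List, Tuple


@dataclass(frozen=True)
class CardPayment:
    customer_id: str
    timestamp: float  # seconds; events are assumed to arrive in time order
    country: str


class TravelDetector:
    """CEP-style rule: `threshold` payments abroad within `window_s` seconds."""

    def __init__(self, home_country: str, threshold: int, window_s: float,
                 notify: Callable[[str, str], None]) -> None:
        self.home_country = home_country
        self.threshold = threshold
        self.window_s = window_s
        self.notify = notify  # stand-in for the call to the RTM engine
        self._abroad: Dict[str, Deque[float]] = defaultdict(deque)

    def on_event(self, p: CardPayment) -> None:
        if p.country == self.home_country:
            return  # domestic payments are irrelevant for this rule
        times = self._abroad[p.customer_id]
        times.append(p.timestamp)
        # Evict payments that have slid out of the time window.
        while times and p.timestamp - times[0] > self.window_s:
            times.popleft()
        if len(times) >= self.threshold:
            self.notify(p.customer_id, "customer_travelling")
            times.clear()  # do not fire again for the same burst of payments


# Usage: collect the business events that would be sent to the RTM engine.
fired: List[Tuple[str, str]] = []
detector = TravelDetector("PL", threshold=3, window_s=3600,
                          notify=lambda cid, name: fired.append((cid, name)))
for ts, country in [(0, "PL"), (100, "DE"), (1800, "DE"),
                    (5000, "AT"), (5400, "AT"), (6000, "AT")]:
    detector.on_event(CardPayment("c42", ts, country))
```

Only the detected business event, not the raw payment stream, reaches the RTM engine, which keeps the engine's load proportional to meaningful signals rather than to raw data volume.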

When designing a real-time data processing architecture, several factors must be taken into account, including latency, the processing time window, raw data volumes, the complexity of detecting business events, and the performance of the RTM engine.
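Latency and RTM engine performance are where the asynchronous responses and aggressive timeouts mentioned above come into play. The following Python sketch uses `asyncio.wait_for` to cap how long the channel waits for the RTM engine and falls back to a safe default when the answer arrives too late. `slow_rtm_engine`, the 0.2-second timeout, and the `no_offer` fallback are hypothetical; real values depend on the latency budget of each use case.

```python
import asyncio
from typing import List

FALLBACK_ACTION = "no_offer"  # hypothetical safe default when the RTM answer is late


async def slow_rtm_engine(customer_id: str, delay_s: float) -> str:
    """Stand-in for a hypothetical RTM engine call with variable latency."""
    await asyncio.sleep(delay_s)
    return f"offer_for_{customer_id}"


async def decide(customer_id: str, delay_s: float, timeout_s: float = 0.2) -> str:
    """Ask the RTM engine for a decision, but never wait longer than timeout_s."""
    try:
        return await asyncio.wait_for(slow_rtm_engine(customer_id, delay_s), timeout=timeout_s)
    except asyncio.TimeoutError:
        return FALLBACK_ACTION  # answer on time instead of blocking the channel


async def main() -> List[str]:
    # Decisions for several customers are requested concurrently, not in sequence.
    return await asyncio.gather(
        decide("c1", delay_s=0.0),  # fast enough: the real offer comes back
        decide("c2", delay_s=1.0),  # too slow: cancelled after timeout_s, fallback used
    )


results = asyncio.run(main())  # ["offer_for_c1", "no_offer"]
```

The trade-off is deliberate: the channel stays responsive, at the cost of occasionally showing no offer when the engine is slow.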

Real-time data processing gives banks new opportunities to position themselves vis-à-vis their customers with personalized offers and services. But building the necessary technical infrastructure is not just a matter for corporate IT.

Marketing and sales must also follow suit. Marketing must ask itself which customer needs should be met in real time, while sales must find ways to develop scalable offerings. Because the task is complex, it often also requires external expertise.

Modern banks are deliberately exploiting opportunities to generate data from their mobile and digital offerings.

Conclusion

Competitive advantages in the banking sector are not generated by products, but by combining internal and external data with the ability to use them profitably.

Traditional bank branch sales are increasingly shifting to digital channels. Modern banks that successfully market their products rely on personalization, real-time data processing and customer insights. This information allows them to design personalized offers, expand their range of services to suit their target groups, and build a one-to-one customer relationship in real time.


About the Author

Grzegorz heads our Financial Institution practice in CEE for BCG Platinion and focuses on core banking system (CBS) implementation, IT Architecture Design, Program Management, Post-Merger Integration, Digital Transformation and System Integration. He has about 20 years of consulting experience and helps businesses and organizations redefine how they serve customers and operate their enterprises.